Semantic Understanding & ToF Point Cloud Detection for Mobile Robots

How Do Semantic Understanding and ToF Point Cloud Detection Improve Mobile Robot Navigation?

In modern mobile robotics, point cloud detection and semantic understanding are becoming core technologies for environmental perception and intelligent decision-making. Combined with ToF (Time-of-Flight) sensors, they let robots navigate precisely, avoid obstacles intelligently, and execute tasks efficiently in complex and dynamic environments, driving advances in industrial automation, logistics, service robots, and autonomous driving.

What is a Time-of-Flight (ToF) Sensor?

A Time-of-Flight (ToF) sensor measures the time it takes for light or other electromagnetic waves to travel from the sensor to an object and back. By calculating this travel time, the ToF sensor can accurately determine the distance between the sensor and the object, generating high-precision depth information.

ToF sensors are a key component in modern robots and intelligent devices for high-precision 3D perception and environment modeling. By measuring light pulse flight time, they provide real-time, accurate depth information, supporting autonomous navigation, obstacle avoidance, and intelligent interactions.

Working Principle of ToF Sensors:

- Emit Light Pulses: The ToF sensor emits invisible infrared or laser pulses.
- Light Reflection: The pulses reflect off object surfaces and return to the sensor.
- Calculate Flight Time: The sensor measures the round-trip time of the pulses. Because the light travels to the object and back, the distance is half the round-trip time multiplied by the speed of light.
- Generate Depth Map: Multiple distance measurements are combined to create 3D depth maps or point cloud data for environmental perception and modeling.
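The flight-time step reduces to a single line of arithmetic. The following is a minimal sketch of that calculation, not any particular sensor's firmware: the function names `tof_distance_m` and `timing_resolution_s` are illustrative assumptions, and many real ToF cameras infer the delay indirectly (for example from the phase shift of modulated light) rather than timing a single pulse.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by SI definition


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target for a measured round-trip time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(depth_resolution_m: float) -> float:
    """Round-trip timing resolution needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


# A 10 ns round trip corresponds to roughly 1.5 m.
distance_m = tof_distance_m(10e-9)
# Resolving 1 mm of depth needs timing on the order of picoseconds.
needed_resolution_s = timing_resolution_s(0.001)
```

The second function shows why depth precision is demanding: a 1 mm depth step corresponds to a round-trip time difference of only a few picoseconds.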

I. Basic Concepts of Point Cloud Detection and ToF Technology

In modern mobile robots, autonomous vehicles, smart warehouses, and service robots, point cloud detection and ToF sensors are foundational for environment perception and autonomous navigation. By capturing high-precision 3D data, robots can perform real-time mapping, obstacle detection, and intelligent path planning, enabling efficient operation in complex indoor and outdoor environments.

1. Point Cloud Detection

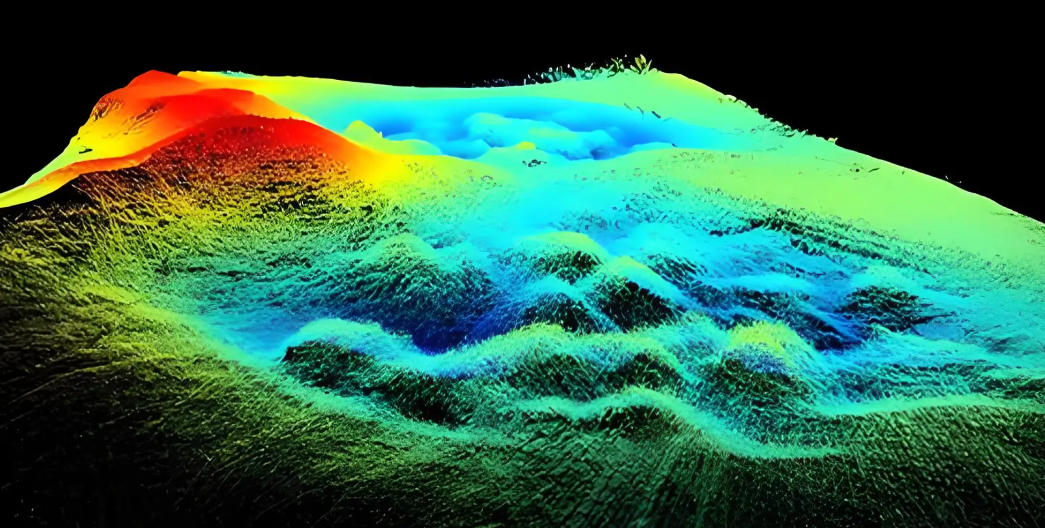

Point cloud detection uses sensors such as LiDAR, ToF, or RGB-D cameras to capture spatial coordinates of objects in the environment, generating dense 3D point cloud models. Each point represents a position on an object’s surface, and millions of such points combine to form an accurate 3D model of the surroundings.
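To make the link between a depth image and a point cloud concrete, here is a hedged sketch that back-projects a small depth map through a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy`, the convention that a depth of 0 marks an invalid pixel, and the function name `depth_to_points` are all assumptions for illustration; a real sensor SDK supplies its own calibration and data layout.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth_m: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (meters) into 3D points in the camera frame."""
    points: List[Point] = []
    for v, row in enumerate(depth_m):        # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0.0:                     # assumed marker for "no return"
                continue
            x = (u - cx) * z / fx            # pinhole model, horizontal axis
            y = (v - cy) * z / fy            # pinhole model, vertical axis
            points.append((x, y, z))
    return points


# Tiny 2x3 depth image with one invalid pixel (hypothetical values).
depth = [[1.0, 1.0, 0.0],
         [2.0, 2.0, 2.0]]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
```

Each valid pixel becomes one point, so a full-resolution depth frame yields a dense cloud of the kind described above.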

Main Functions:

- Obstacle Detection and Avoidance
  - Point clouds reveal walls, furniture, machinery, people, and vehicles, covering both static and dynamic obstacles.
  - Integrated with navigation algorithms, robots can plan paths in real time for dynamic obstacle avoidance and safe movement.
  - In outdoor environments like roads, plazas, or industrial yards, point cloud detection helps identify vehicles, pedestrians, buildings, and other moving objects, supporting autonomous driving and all-terrain robot navigation.
- Environment Modeling and Navigation Support
  - Point cloud data can feed SLAM (Simultaneous Localization and Mapping) systems, generating high-precision 3D maps for indoor and outdoor autonomous navigation.
  - For autonomous warehouses, inspection robots, or self-driving vehicles, point cloud mapping provides reliable environmental references for path planning.
- Dynamic Monitoring and State Analysis
  - Point clouds can track object movement, enabling real-time monitoring of dynamic environments.
  - In industrial or logistics applications, point cloud analysis can track changes in stacked items or the position of transport vehicles, improving efficiency and safety.
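As a rough illustration of the obstacle detection function listed above, the sketch below checks a point cloud for the nearest return inside a corridor in front of the robot. It assumes points are already expressed in a robot frame with x forward, y left, and z up, and every threshold (`half_width_m`, `min_height_m`, `stop_distance_m`, and so on) is a hypothetical value, not a recommendation.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]


def nearest_obstacle_ahead(points: Iterable[Point],
                           half_width_m: float = 0.4,
                           min_height_m: float = 0.05,
                           max_height_m: float = 1.5,
                           max_range_m: float = 5.0) -> Optional[float]:
    """Forward distance to the closest point inside the robot's corridor."""
    nearest: Optional[float] = None
    for x, y, z in points:
        in_corridor = 0.0 < x <= max_range_m and abs(y) <= half_width_m
        # Skip floor returns and anything above the robot's height.
        is_obstacle_height = min_height_m <= z <= max_height_m
        if in_corridor and is_obstacle_height:
            if nearest is None or x < nearest:
                nearest = x
    return nearest


def should_stop(points: Iterable[Point], stop_distance_m: float = 0.8) -> bool:
    """True when an obstacle lies within the (assumed) stopping distance."""
    distance = nearest_obstacle_ahead(points)
    return distance is not None and distance <= stop_distance_m


scene = [
    (2.0, 0.1, 0.5),   # box ahead, inside the corridor
    (0.6, 1.2, 0.5),   # shelf off to the side, outside the corridor
    (1.0, 0.0, 0.0),   # floor return, ignored by the height filter
]
nearest = nearest_obstacle_ahead(scene)
```

A geometric check like this says only that something is in the way, not what it is.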

Advantages of ToF Sensors in Point Cloud Detection:

- High-Precision Depth Sensing: By measuring the flight time of light pulses, ToF can reach millimeter-level accuracy at short range and remains effective in low-texture or low-light scenes where passive cameras struggle.
- Real-Time Continuous Capture: Combined with LiDAR or RGB cameras, ToF enables high-frame-rate 3D point cloud generation for navigation and rapid decision-making.
- Efficient Data Processing: Compared with traditional LiDAR, ToF data is typically smaller and lower in latency, which suits dynamic environments.
- Indoor and Outdoor Applications: ToF point clouds support environmental perception across warehouses, factories, plazas, and roads.

2. Limitations and Challenges of Point Cloud Detection

Despite its advantages, point cloud detection faces inherent limitations:

- Lack of Semantic Understanding
  - Point clouds mainly provide geometric information: shape, position, and size.
  - On their own, they cannot distinguish object types or functions (e.g., a “table” vs. a “door”) or determine whether a door can open.
  - In intelligent warehouses, service robots, or autonomous vehicles, relying solely on geometry may lead to less intelligent path planning.
- Limited Environmental Adaptability
  - Strong light, rain, snow, fog, and reflective or wet surfaces can distort point cloud data or introduce noise.
  - In dynamic outdoor environments, moving obstacles (people, vehicles, animals) may cause delays in data updates, affecting navigation accuracy.
- Complex and Heavy Data Processing
  - High-density point clouds contain hundreds of thousands to millions of points, requiring filtering, segmentation, feature extraction, and registration before use in SLAM or path planning.
  - Processing demands substantial compute; traditional methods may not achieve real-time performance, necessitating GPU acceleration, deep learning, or edge computing.
- High Demand for Sensor Fusion
  - Point cloud data from a single sensor is often insufficient for complex perception. Integration with RGB camera, ToF, and IMU data is often required.
  - Fusion techniques are complex but significantly improve perception accuracy and navigation stability for indoor and outdoor autonomous navigation.
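One common way to reduce the processing load described above is voxel grid downsampling, which replaces all points inside each small cube with their centroid. The sketch below is a plain-Python illustration under that assumption; the function name `voxel_downsample` and the voxel size are hypothetical, and production systems would normally use an optimized point cloud library rather than a loop like this.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxel_downsample(points: Sequence[Point], voxel_size_m: float) -> List[Point]:
    """Replace the points in each cubic voxel with their centroid."""
    if voxel_size_m <= 0:
        raise ValueError("voxel size must be positive")
    buckets: Dict[VoxelKey, List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis; floor handles negatives correctly.
        key = (math.floor(p[0] / voxel_size_m),
               math.floor(p[1] / voxel_size_m),
               math.floor(p[2] / voxel_size_m))
        buckets[key].append(p)
    reduced: List[Point] = []
    for members in buckets.values():
        n = len(members)
        reduced.append((sum(m[0] for m in members) / n,
                        sum(m[1] for m in members) / n,
                        sum(m[2] for m in members) / n))
    return reduced


raw = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.26, 0.0, 0.0)]
sparse = voxel_downsample(raw, voxel_size_m=0.1)  # two voxels are occupied
```

Larger voxels shrink the cloud further at the cost of geometric detail, so the size is a trade-off between speed and fidelity.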

3. Trends and Optimization Directions

- ToF + Point Cloud + Semantic Understanding Fusion
  - Use deep learning algorithms for semantic segmentation of point clouds to recognize object types and functions, enabling robots to not only “see” but also “understand” objects.
- Dynamic Environment Adaptation
  - Fuse ToF point cloud and visual data to recognize and predict pedestrians, vehicles, and moving obstacles, improving outdoor navigation reliability.
- Efficient Processing and Edge Computing
  - Use GPU or AI chips to accelerate point cloud processing, enabling mobile robots to achieve millisecond-level responses in complex environments.
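One simple way to attach semantics to ToF points, sketched here under strong assumptions, is to project each 3D point into an image that has already been segmented and copy the class found at that pixel. The sketch assumes the depth sensor and the camera share one frame (no extrinsic transform), that a segmentation network has produced `mask` as a grid of class names, and that `fx`, `fy`, `cx`, `cy` are known intrinsics; `label_points` is a hypothetical helper, not a library call.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def label_points(points: Sequence[Point], mask: Sequence[Sequence[str]],
                 fx: float, fy: float, cx: float, cy: float
                 ) -> List[Tuple[Point, str]]:
    """Give each 3D point the class of the mask pixel it projects onto."""
    height, width = len(mask), len(mask[0])
    labeled: List[Tuple[Point, str]] = []
    for x, y, z in points:
        if z <= 0.0:                       # behind the camera or invalid
            continue
        u = int(round(fx * x / z + cx))    # pinhole projection, column
        v = int(round(fy * y / z + cy))    # pinhole projection, row
        if 0 <= u < width and 0 <= v < height:
            labeled.append(((x, y, z), mask[v][u]))
    return labeled


# 2x2 segmentation mask from an assumed upstream model.
mask = [["wall", "door"],
        ["floor", "floor"]]
points = [(0.2, -0.2, 1.0), (-0.2, 0.2, 1.0), (5.0, 0.0, 1.0)]
semantic_cloud = label_points(points, mask, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Points that project outside the image are dropped here; a real pipeline would also need calibration between the two sensors and some handling of occlusion.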

II. Integration of Semantic Understanding and ToF Sensors

With the development of mobile robots, autonomous driving, and smart warehouse technologies, relying solely on point clouds or visual data is no longer sufficient for intelligent navigation and task execution in complex environments. Semantic understanding enables robots not only to “see” the environment but also to comprehend its meaning, allowing for intelligent decision-making and autonomous operations. ToF (Time-of-Flight) sensors play a crucial role in this process by providing high-precision depth data that enhances semantic recognition and environmental perception.

1. Core Role of Semantic Understanding

Semantic understanding leverages deep learning, computer vision, and 3D point cloud data to enable robots to recognize, classify, and understand objects and scenes in their environment. Key benefits include:

- Object Recognition and Classification
  - Robots can identify specific objects, such as tables, chairs, shelves, or operable devices, understanding both their location and functional attributes.
  - In warehouse environments, robots can distinguish different types of goods for intelligent picking; in service scenarios, robots can identify doors, windows, or handrails and plan optimal paths.
- Environmental Semantic Analysis
  - Differentiates traversable paths from non-passable areas, such as doors vs. walls or stairs vs. flat surfaces.
  - Separates dynamic objects (people, vehicles) from static ones (walls, furniture) and predicts the movement of the former, supporting intelligent obstacle avoidance and path optimization.
- Intelligent Task Planning
  - Robots can autonomously select optimal routes, adjust operational strategies, and collaborate with other robots based on environmental semantics.
  - This enhances efficiency and safety in industrial, logistics, inspection, or service scenarios.
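To show how semantic labels can feed route selection, the following sketch turns a grid of class labels into traversal costs and compares two candidate paths. The class names, the cost values in `TRAVERSAL_COST`, and the helper names are all illustrative assumptions; an actual planner would use its own cost map format and search algorithm.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical costs: free floor is cheap, a door is passable but slower,
# and walls, stairs, and people are treated as impassable.
TRAVERSAL_COST: Dict[str, float] = {
    "floor": 1.0,
    "door": 3.0,
    "stairs": math.inf,
    "wall": math.inf,
    "person": math.inf,
}


def cost_grid(label_grid: Sequence[Sequence[str]],
              unknown_cost: float = 10.0) -> List[List[float]]:
    """Map each semantic label to a traversal cost; unknown classes are penalized."""
    return [[TRAVERSAL_COST.get(label, unknown_cost) for label in row]
            for row in label_grid]


def path_cost(costs: Sequence[Sequence[float]],
              path: Sequence[Tuple[int, int]]) -> float:
    """Total cost of visiting the given (row, column) cells in order."""
    return sum(costs[r][c] for r, c in path)


labels = [["floor", "wall", "floor"],
          ["floor", "door", "floor"]]
costs = cost_grid(labels)
through_wall = path_cost(costs, [(0, 0), (0, 1), (0, 2)])
through_door = path_cost(costs, [(1, 0), (1, 1), (1, 2)])
```

With geometry alone the wall and the closed door would both look like obstacles; the semantic cost makes the door route finite and the wall route infinite.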

2. Key Role of ToF Sensors in Semantic Understanding

ToF sensors measure the time it takes for light pulses to travel from the sensor to an object and back, generating high-precision depth information and providing the 3D spatial foundation for semantic understanding. Combined with semantic analysis, ToF offers the following advantages:

- Accurate Depth Perception
  - Provides millimeter-level depth data and high-resolution 3D point clouds, supporting semantic segmentation and object recognition.
  - Effective in low-texture or uniform environments (e.g., blank walls or smooth floors), supplementing visual data to ensure accurate environmental modeling.
- Support in Low-Light or Complex Lighting Conditions
  - Visual cameras may struggle at night or in high-contrast lighting.
  - ToF sensors use their own active illumination rather than ambient light, so they provide reliable depth information and keep semantic understanding stable in challenging lighting conditions.
- Real-Time Dynamic Obstacle Tracking
  - Rapidly captures 3D positions of moving objects (people, vehicles, other robots); velocities can then be estimated from frame-to-frame changes, aiding dynamic obstacle avoidance.
  - In warehouses, factories, or outdoor roads, combined with semantic understanding, robots can predict obstacle trajectories and optimize path planning.
- Multi-Sensor Data Fusion
  - ToF point clouds can be fused with RGB camera, LiDAR, and IMU data to combine visual SLAM with point cloud semantic understanding.
  - Multi-source fusion improves perception accuracy and robustness, particularly in mixed indoor-outdoor environments, dynamic settings, and all-terrain navigation.
- Enhanced Autonomous Decision-Making
  - With semantic understanding, ToF-provided 3D depth information enables robots to not only detect obstacle locations but also understand object types and functions.
  - For example, indoor navigation robots can determine whether a door can be opened or if stairs are passable; warehouse robots can assess the safety of stacked goods.
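The dynamic obstacle tracking described above can be sketched with a constant-velocity model: take the centroid of an object's points in two consecutive frames, divide the displacement by the frame interval, and extrapolate. This is a deliberately simple illustration that assumes the points have already been clustered and associated with the same object across frames; the function names are hypothetical, and real trackers typically add filtering (for example a Kalman filter) to cope with noise.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def centroid(points: Sequence[Vec3]) -> Vec3:
    """Mean position of one object's points."""
    if not points:
        raise ValueError("cannot take the centroid of an empty cluster")
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def estimate_velocity(previous: Vec3, current: Vec3, dt_s: float) -> Vec3:
    """Finite-difference velocity between two frames dt_s seconds apart."""
    if dt_s <= 0:
        raise ValueError("frame interval must be positive")
    return ((current[0] - previous[0]) / dt_s,
            (current[1] - previous[1]) / dt_s,
            (current[2] - previous[2]) / dt_s)


def predict_position(position: Vec3, velocity: Vec3, horizon_s: float) -> Vec3:
    """Constant-velocity extrapolation horizon_s seconds ahead."""
    return (position[0] + velocity[0] * horizon_s,
            position[1] + velocity[1] * horizon_s,
            position[2] + velocity[2] * horizon_s)


# The same (hypothetical) object seen in two frames 0.1 s apart.
frame_a = [(1.0, 0.0, 0.0), (1.2, 0.0, 0.0)]
frame_b = [(1.5, 0.2, 0.0), (1.7, 0.2, 0.0)]
velocity = estimate_velocity(centroid(frame_a), centroid(frame_b), dt_s=0.1)
future = predict_position(centroid(frame_b), velocity, horizon_s=0.5)
```

The predicted position can then be treated as an occupied region when planning, so the robot avoids where the obstacle is heading rather than where it was.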

3. Application Scenarios

- Intelligent Warehouse Robots
  - Use ToF point clouds to generate accurate 3D maps of shelves, combined with semantic understanding to recognize item types for automated picking, stacking, and transport.
- Service Robots
  - In malls, hotels, or exhibition halls, robots fuse ToF and visual data to detect people, plan paths, and interact with the environment.
- Autonomous Driving and Outdoor Mobile Robots
  - ToF sensors detect pedestrians, vehicles, and obstacles in real time. Semantic understanding identifies traffic signs and road structures for safe navigation.
- Industrial Inspection Robots
  - ToF + semantic point cloud technologies help robots identify equipment, pipelines, or hazardous areas in complex factory environments for automated inspection and anomaly detection.

4. Trends and Technological Advantages

- High Precision, Low Latency: ToF provides real-time 3D depth data, enhancing the speed and accuracy of autonomous decision-making.
- Indoor-Outdoor Versatility: Usable both outdoors and in low-texture indoor warehouses, although strong light can add noise to the depth data.
- Intelligent Obstacle Avoidance and Path Optimization: Semantic analysis and dynamic obstacle prediction enable autonomous navigation and safe operation.
- Multi-Robot Collaboration: Sharing semantic + ToF data enables coordinated task planning, improving industrial automation efficiency.

III. Robotic Applications of Semantic Understanding

1. Autonomous Vehicles

- Companies: Waymo, Baidu Apollo, etc.
- Tech Stack: LiDAR + ToF + cameras + deep learning semantic segmentation.
- Function: Identify pedestrians, vehicles, and traffic signs for urban navigation and intelligent decision-making.

2. Smart Warehouse Robots

- Companies: Ocado, JD warehouse robots.
- Tech Stack: Visual SLAM + deep learning + ToF point cloud recognition.
- Function: Identify goods on shelves for precise picking, enhancing warehouse automation efficiency.

3. Service Robots

- Examples: Pepper, Temi.
- Function: Recognize environmental objects and human behavior through semantic understanding for human-robot interaction, emotional response, and intelligent service.

4. Advantages of Combining ToF and Semantic Understanding

- Enhanced Environmental Comprehension: Robots understand object geometry, functionality, and interaction characteristics.
- Improved Navigation Intelligence: With SLAM, robots can perform real-time path planning and obstacle avoidance in dynamic indoor and outdoor environments.
- Adaptation to Complex Environments: Maintains high-precision perception under varying light, low-texture surfaces, reflections, and dynamic obstacles.
- Increased Task Efficiency: Robots can autonomously plan tasks in logistics, inspection, and service scenarios, reducing human intervention.

Summary

By combining ToF point cloud detection with semantic understanding, modern mobile robots can achieve accurate mapping, intelligent recognition, and dynamic obstacle avoidance in complex and dynamic environments. This integration is driving advancements in autonomous driving, smart warehouses, and service robotics, providing more efficient and reliable solutions for autonomous navigation and intelligent operations.

