TOF-LiDAR Fusion: Enabling Global Perception for Autonomous Driving

How can TOF and LiDAR work together to address the challenges of short-range and long-range perception in autonomous driving?

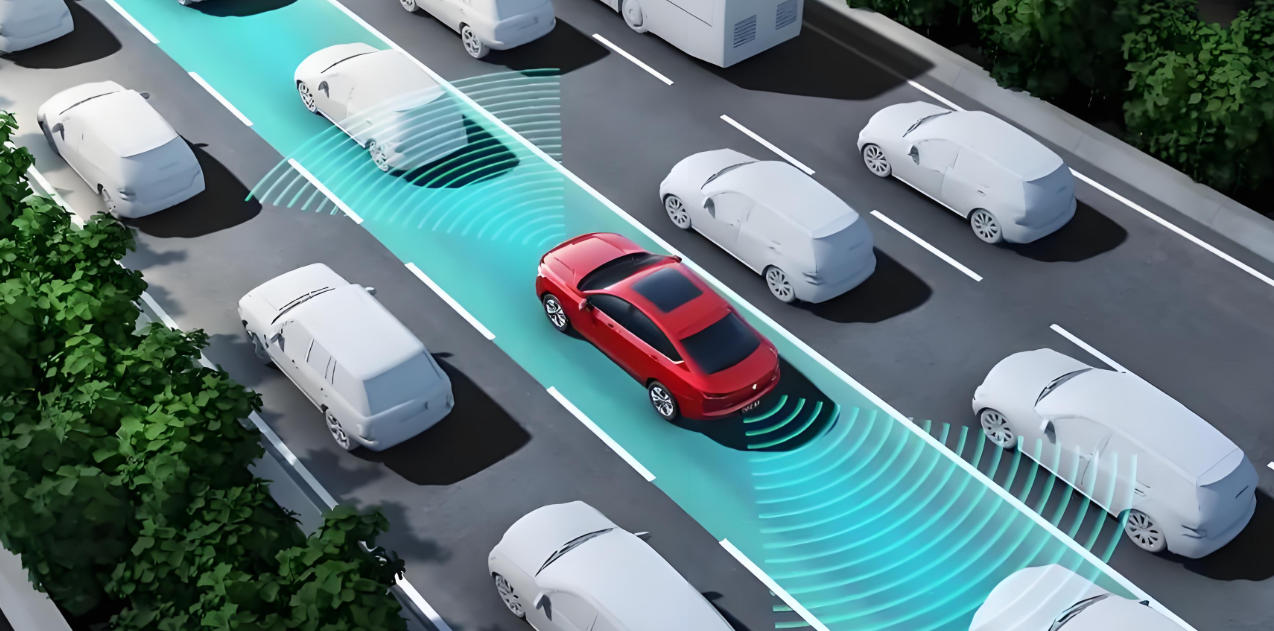

With the rapid development of autonomous driving, vehicle perception capabilities have become a critical factor in ensuring driving safety. Currently, the most widely adopted perception solutions include cameras, millimeter-wave radar, LiDAR, and TOF (Time-of-Flight) sensors. However, a single sensor technology inevitably comes with limitations, making it difficult to achieve true global perception. Therefore, TOF–LiDAR fusion has emerged as an important development direction in the field of autonomous driving, providing higher accuracy and reliability for obstacle detection, environmental perception, and dynamic decision-making.

What is autonomous driving?

'Autonomous driving' refers to a vehicle’s ability to drive itself with little or no human intervention, relying on a combination of sensors (such as cameras, LiDAR, and TOF), AI algorithms, control systems, and high-definition maps.

In simple terms, it means a car can “see the road, make judgments, and take actions” like a human, thereby achieving self-driving, obstacle avoidance, acceleration/deceleration, and steering.

According to the SAE (Society of Automotive Engineers) J3016 classification, driving automation is divided into six levels (L0–L5):

- L0: No driving automation (the human performs all driving tasks)
- L1: Driver assistance (e.g., adaptive cruise control)
- L2: Partial automation (e.g., Tesla Autopilot); the driver must supervise at all times
- L3: Conditional automation (the system drives in specific scenarios, but the driver must take over when requested)
- L4: High automation (the vehicle drives itself without human intervention within a defined operating domain)
- L5: Full automation (no human driver required in any scenario)

1. Current Status of Autonomous Driving Perception Technologies

In the development of autonomous driving, environmental perception is the foundation for ensuring safety and enabling intelligent decision-making. At present, most autonomous driving systems rely on cameras, millimeter-wave radar, or LiDAR either individually or in simple combinations. While each sensor type has its strengths, they also come with inherent weaknesses, making comprehensive global perception extremely challenging in complex traffic environments.

- Cameras: Cameras provide high-resolution images similar to human vision, excelling in object recognition, traffic sign detection, and lane marking identification. However, they are highly dependent on lighting conditions and perform poorly at night, against backlight, or in adverse weather (rain or snow), leading to missing or inaccurate data.
- Millimeter-wave radar: This sensor type performs reliably in speed measurement and distance detection, making it suitable for applications like collision avoidance and adaptive cruise control. However, its relatively low resolution limits its ability to identify small obstacles or complex road scenarios, which can result in misclassification.
- LiDAR: LiDAR is considered the “core eye” of autonomous driving due to its powerful 3D mapping capability. It generates precise point cloud data for reconstructing the environment. However, LiDAR has blind spots in close-range detection, its performance may degrade in harsh weather, and its high cost remains a barrier to large-scale adoption.

Because of these limitations, a single sensor cannot meet the strict safety and robustness requirements of higher-level autonomous driving. In urban congestion, highways, or poor weather conditions, relying solely on one type of sensor may lead to missed detections, false positives, or even sensor failure.

As a result, the industry widely agrees that the future lies in multi-sensor fusion. By combining TOF sensors, LiDAR, cameras, and millimeter-wave radar, and leveraging advanced fusion algorithms with AI-driven processing, autonomous driving systems can achieve complementary advantages across short-, medium-, and long-range perception. This approach not only improves obstacle recognition accuracy but also enhances adaptability to diverse environments, significantly reducing accident risks and laying the groundwork for full autonomous driving.

2. Complementary Advantages of TOF and LiDAR

Within the perception system of autonomous driving, no single sensor can simultaneously handle high-precision short-range recognition and large-scale long-range scanning. This makes sensor fusion a necessary path forward. Among the many options, TOF sensors and LiDAR stand out as complementary technologies, increasingly forming a synergistic perception framework.

The Value of TOF Sensors

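TOF (Time-of-Flight) sensors calculate distance by emitting light and measuring how long it takes to return, either by timing short pulses directly or by measuring the phase shift of continuously modulated light. They offer exceptional short-range precision.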
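
The range calculation itself is simple. As a minimal sketch, the Python below applies the standard time-of-flight relations: for direct (pulsed) TOF the distance is d = c·t/2, because the light covers the distance twice; for indirect (continuous-wave) TOF the distance is d = c·φ/(4πf) for a measured phase shift φ at modulation frequency f, which also caps the unambiguous range at c/(2f). The function names and the 20 ns and 20 MHz values are illustrative assumptions, not parameters of any particular sensor.

```python
"""Range equations for time-of-flight depth sensing (illustrative sketch)."""
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def direct_tof_distance(round_trip_s: float) -> float:
    """Direct (pulsed) TOF: light travels out and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def indirect_tof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) TOF: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """The phase wraps at 2*pi, so distances beyond c / (2 f) alias back."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


# Hypothetical values: a 20 ns round trip and 20 MHz modulation.
print(f"{direct_tof_distance(20e-9):.3f} m")   # -> 2.998 m
print(f"{unambiguous_range(20e6):.2f} m")      # -> 7.49 m
```

Raising the modulation frequency improves depth resolution but shrinks the unambiguous range, which is one reason indirect TOF sensors are usually tuned for near-field work.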

- In autonomous driving scenarios, TOF can detect fine-grained details around the vehicle, such as pedestrian gestures, cyclists’ posture changes, low curbs, or even small pets and dropped objects.
- This high-resolution near-field capability is critical for solving the “blind spot problem.” In scenarios like low-speed parking, narrow street turns, or navigating underground garages, TOF plays an indispensable role.
- Because it supplies its own illumination, TOF is less affected by ambient lighting than cameras, maintaining reliable depth perception in nighttime or backlit environments and making the overall system more robust.

The Advantages of LiDAR

By contrast, LiDAR (Light Detection and Ranging) is known for its long-range detection and wide-area scanning capabilities.

- Its greatest strength lies in generating accurate 3D point clouds across distances of hundreds of meters, creating a comprehensive 3D model of the surroundings.
- On highways or in open spaces, LiDAR enables early detection of distant vehicles, pedestrians, and road boundaries, giving the system sufficient time to react.
- LiDAR also tolerates a degree of environmental interference: it remains relatively stable in light rain or fog, although heavier rain, snow, or dense fog degrade its detection reliability.

The Fusion Advantage of TOF and LiDAR

When TOF and LiDAR are combined, they create a multi-layered, complementary perception system:

- Short-range: TOF captures precise details, filling in LiDAR’s blind spots in close-range recognition. For example, when driving through crowded urban streets, TOF can detect pedestrians or objects that suddenly appear near the vehicle.
- Long-range: LiDAR constructs wide-area maps, enabling the system to perceive global scenarios and allowing vehicles to make early braking or avoidance decisions at high speeds.
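
The near-field blind spot that TOF fills follows largely from mounting geometry. A minimal sketch, assuming flat ground, a roof-mounted LiDAR whose lowest beam points θ degrees below horizontal, and no occlusion by the vehicle body: a target of height h_t is first reached by that beam at a horizontal distance of (H − h_t)/tan θ, where H is the mounting height. The function name and the 1.8 m height and 15° angle in the example are hypothetical values, not the specification of any real sensor.

```python
import math


def lidar_blind_radius(mount_height_m: float, lowest_beam_deg: float,
                       target_height_m: float = 0.0) -> float:
    """Horizontal distance inside which the lowest beam passes over a target.

    Simplified geometry (assumptions): flat ground, no body occlusion,
    lowest beam pointing lowest_beam_deg below horizontal.
    """
    if target_height_m >= mount_height_m:
        return 0.0  # target top is at or above the sensor; near-horizontal beams reach it
    if lowest_beam_deg <= 0.0:
        return math.inf  # no downward beam: low targets are never hit
    drop = mount_height_m - target_height_m
    return drop / math.tan(math.radians(lowest_beam_deg))


# Hypothetical roof mount: 1.8 m high, lowest beam 15 degrees down.
print(f"ground: {lidar_blind_radius(1.8, 15.0):.2f} m")        # -> 6.72 m
print(f"curb:   {lidar_blind_radius(1.8, 15.0, 0.15):.2f} m")  # -> 6.16 m
```

With these assumed numbers, ground-level objects within roughly 6.7 m of the sensor fall below the lowest beam, which is the kind of near-field region where a short-range TOF sensor adds coverage.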

The integration goes beyond distance complementarity; it also enriches the data. TOF measurements can be fused with LiDAR point cloud data, and after processing by AI algorithms, the system can:

- Improve obstacle detection accuracy through cross-validation of multi-source data.
- Enhance dynamic target tracking with more precise trajectory predictions for moving objects.
- Increase redundancy and reliability, so that if one sensor’s performance drops, the other can compensate.
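
Cross-validation and redundancy can be illustrated at the level of a single range reading. The sketch below is a simplified example under stated assumptions, not a production method: two readings of the same target are accepted only if they agree within a gate of a few combined standard deviations, then combined by inverse-variance weighting; if one sensor reports nothing, the other is used alone. The function name, status strings, noise values, and 3-sigma gate are hypothetical choices.

```python
import math
from typing import Optional, Tuple


def fuse_range(tof_m: Optional[float], tof_sigma_m: float,
               lidar_m: Optional[float], lidar_sigma_m: float,
               gate_sigmas: float = 3.0) -> Tuple[Optional[float], str]:
    """Fuse TOF and LiDAR range readings of one target (illustrative sketch).

    Returns (range_m, status). None marks a missing reading.
    """
    if tof_m is None and lidar_m is None:
        return None, "no_data"
    if lidar_m is None:
        return tof_m, "tof_only"      # redundancy: LiDAR reading missing
    if tof_m is None:
        return lidar_m, "lidar_only"  # redundancy: TOF reading missing
    # Cross-validation: do the two sensors agree within the expected noise?
    combined_sigma = math.sqrt(tof_sigma_m ** 2 + lidar_sigma_m ** 2)
    if abs(tof_m - lidar_m) > gate_sigmas * combined_sigma:
        return None, "conflict"       # flag for downstream handling
    # Inverse-variance weighting: the less noisy sensor gets more weight.
    w_tof = 1.0 / tof_sigma_m ** 2
    w_lidar = 1.0 / lidar_sigma_m ** 2
    fused = (w_tof * tof_m + w_lidar * lidar_m) / (w_tof + w_lidar)
    return fused, "fused"


print(fuse_range(2.00, 0.01, 2.03, 0.03))  # agree: result weighted toward TOF
print(fuse_range(2.00, 0.01, 2.50, 0.03))  # disagree: (None, 'conflict')
print(fuse_range(None, 0.01, 2.03, 0.03))  # TOF missing: (2.03, 'lidar_only')
```

Inverse-variance weighting is the minimum-variance way to combine two independent, unbiased Gaussian measurements, which is why the more precise near-field TOF reading dominates in the first example.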

Application Prospects and Significance

As autonomous driving progresses toward higher levels, sensor fusion is becoming an inevitable trend. The TOF–LiDAR combination enables vehicles to achieve near-field and far-field “no blind spot” global perception, with key benefits including:

- Improved urban traffic safety by identifying potential risks in complex environments.
- Enhanced automated parking through precise vehicle posture control in tight spaces.
- Greater highway reliability by predicting hazards earlier and planning safe maneuvers.

Ultimately, this fusion approach significantly strengthens both the safety and reliability of autonomous driving, providing a solid technical foundation for L4 (high) and L5 (full) automation.

3. Fusion Algorithms and Data Processing

The fusion of TOF and LiDAR is not simply a hardware overlay but rather a system-level integration involving complex algorithms, time synchronization, and multi-source data processing. To fully unlock their complementary potential, advanced fusion algorithms and intelligent data processing techniques are required to achieve the high-precision perception needed for autonomous driving.
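
Time synchronization is a good example of why fusion is more than a hardware overlay: a TOF camera and a LiDAR typically run at different frame rates, so each TOF frame has to be paired with the LiDAR sweep closest in time before their data can be combined. The sketch below shows only that software pairing step, assuming both sensors already stamp data on a shared clock (in practice this is often provided by hardware triggering or a protocol such as IEEE 1588 PTP) and ignoring motion compensation. The function name, timestamps, and 50 ms tolerance are illustrative assumptions.

```python
import bisect
from typing import List, Optional, Tuple


def pair_frames(tof_stamps: List[float], lidar_stamps: List[float],
                max_skew_s: float) -> List[Tuple[float, Optional[float]]]:
    """Pair each TOF timestamp with the nearest LiDAR timestamp.

    lidar_stamps must be sorted ascending. If the nearest sweep is more than
    max_skew_s away, the TOF frame is paired with None and should not be fused.
    """
    pairs: List[Tuple[float, Optional[float]]] = []
    for t in tof_stamps:
        i = bisect.bisect_left(lidar_stamps, t)
        # The nearest sweep is either just before t or at/after t.
        candidates = lidar_stamps[max(i - 1, 0):i + 1]
        best = min(candidates, key=lambda s: abs(s - t), default=None)
        if best is not None and abs(best - t) <= max_skew_s:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs


lidar = [0.0, 0.1, 0.2, 0.3]     # hypothetical 10 Hz LiDAR sweep times (s)
tof = [0.033, 0.066, 0.1, 0.45]  # hypothetical TOF frame times (s)
print(pair_frames(tof, lidar, max_skew_s=0.05))
# -> [(0.033, 0.0), (0.066, 0.1), (0.1, 0.1), (0.45, None)]
```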


1. Multi-Source Data Fusion: From Point Clouds to Semantic Scenes

Different sensors vary in terms of resolution, sampling frequency, detection range, and noise characteristics.

- TOF sensors specialize in high-precision near-field point clouds, typically achieving centimeter-level or even finer depth measurements.
- LiDAR generates long-range 3D point clouds covering up to several hundred meters, although point density falls off as distance increases.

Once extrinsic calibration has placed both sensors in a common coordinate frame, deep learning and multi-modal fusion algorithms can align and match their data, creating a 3D environmental model that contains both detailed near-field features and long-range global coverage. This fused data can then be combined with camera-based semantic recognition, forming a high-dimensional scene representation that offers both geometric precision and semantic understanding. This not only reduces redundancy but also improves the overall efficiency of perception.
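
The geometric part of that alignment can be sketched in a few lines. Assuming the extrinsic calibration is already known as a rotation matrix R and translation vector t, each TOF point p is mapped into the LiDAR frame as p' = R·p + t; a simple rule then keeps TOF points inside a near-field handoff radius and LiDAR points beyond it. The mounting offset, handoff radius, function names, and hard cutoff are hypothetical simplifications; real pipelines usually also fuse the overlap region and rely on the learned models described above rather than a fixed rule.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def to_lidar_frame(points: Sequence[Point], R: Sequence[Sequence[float]],
                   t: Sequence[float]) -> List[Point]:
    """Apply the rigid extrinsic transform p' = R p + t to each TOF point."""
    out: List[Point] = []
    for x, y, z in points:
        out.append((R[0][0] * x + R[0][1] * y + R[0][2] * z + t[0],
                    R[1][0] * x + R[1][1] * y + R[1][2] * z + t[1],
                    R[2][0] * x + R[2][1] * y + R[2][2] * z + t[2]))
    return out


def norm(p: Point) -> float:
    """Euclidean distance of a point from the LiDAR origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)


def merge_by_range(tof_in_lidar: Sequence[Point], lidar: Sequence[Point],
                   handoff_m: float) -> List[Point]:
    """Near field from TOF, far field from LiDAR (hard cutoff at handoff_m)."""
    near = [p for p in tof_in_lidar if norm(p) <= handoff_m]
    far = [p for p in lidar if norm(p) > handoff_m]
    return near + far


# Hypothetical extrinsics: TOF axes aligned with LiDAR, 1.5 m ahead, 0.3 m lower.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [1.5, 0.0, -0.3]
tof_pts = [(0.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
lidar_pts = [(1.0, 0.0, 0.0), (40.0, 2.0, 0.0)]
merged = merge_by_range(to_lidar_frame(tof_pts, R, t), lidar_pts, handoff_m=4.0)
print(merged)  # -> [(2.0, 0.0, -0.3), (40.0, 2.0, 0.0)]
```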

2. Obstacle Detection and Intelligent Classification

In obstacle recognition, fusion algorithms enable multi-layered fine-grained processing:

- TOF provides high-resolution features of small, close-range obstacles such as children, pets, traffic cones, and curbs.
- LiDAR delivers long-range object contours and spatial positioning, such as vehicles, pedestrians, and road boundaries hundreds of meters away.

By integrating machine learning classifiers, the system can perform accurate semantic labeling and category differentiation of various obstacles. For example, it can distinguish between a 'moving pedestrian' and a 'stationary roadblock,' while assessing their potential threat levels. This significantly reduces false positives and missed detections, improving the robustness of autonomous driving in complex urban environments.
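
The threat-assessment step can be illustrated with a deliberately simple, rule-based stand-in. The sketch assumes an upstream classifier (not shown) has already labeled each fused track and that tracking has estimated its ground speed and closing speed; it then separates moving from stationary objects with a speed threshold and grades threat by time-to-collision (range divided by closing speed). The `Track` class, thresholds, and example values are hypothetical, and this is not the machine-learning method the text refers to.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    label: str          # class from an upstream classifier (assumed)
    range_m: float      # distance from the ego vehicle to the object
    speed_mps: float    # object's own ground speed
    closing_mps: float  # rate at which the gap shrinks (> 0 means approaching)


def motion_state(track: Track, moving_thresh_mps: float = 0.5) -> str:
    """Label an object moving or stationary by its own speed."""
    return "moving" if track.speed_mps > moving_thresh_mps else "stationary"


def time_to_collision(track: Track) -> float:
    """Seconds until contact at the current closing speed."""
    if track.closing_mps <= 0.0:
        return math.inf  # not approaching
    return track.range_m / track.closing_mps


def threat_level(track: Track, high_s: float = 2.0, medium_s: float = 5.0) -> str:
    """Grade threat by time-to-collision thresholds (assumed values)."""
    ttc = time_to_collision(track)
    if ttc < high_s:
        return "high"
    if ttc < medium_s:
        return "medium"
    return "low"


tracks = [
    Track("pedestrian", range_m=6.0, speed_mps=1.4, closing_mps=4.0),
    Track("roadblock", range_m=60.0, speed_mps=0.0, closing_mps=15.0),
]
for tr in tracks:
    print(tr.label, motion_state(tr), f"{time_to_collision(tr):.1f} s", threat_level(tr))
# pedestrian moving 1.5 s high
# roadblock stationary 4.0 s medium
```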

3. Dynamic Object Tracking and Path Prediction

Autonomous vehicles must operate within highly dynamic traffic environments.

- TOF excels in high-frame-rate near-field perception, capturing rapidly changing details in real time.
- LiDAR excels in wide-range coverage, providing trajectory extension and spatial data for distant targets.

Through Kalman filtering, Bayesian estimation, and deep prediction networks, the fusion system can perform millisecond-level trajectory tracking and motion prediction of pedestrians, cyclists, and other vehicles. Based on this, vehicles can conduct dynamic path planning, emergency braking, or evasive maneuvers, significantly enhancing real-time responsiveness, safety, and driving smoothness.
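
Of the techniques named above, Kalman filtering is the easiest to show compactly. The following is a minimal 1-D constant-velocity Kalman filter, not the tracker of any particular system: the state is [position, velocity], each fused range measurement corrects the prediction, and velocity is inferred rather than measured. The process-noise value q, measurement-noise variance r, initial covariance, and 0.1 s update interval are assumptions; a real tracker would use a 2-D or 3-D state, per-sensor noise models, and data association across multiple targets.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter with state [position, velocity]."""
    q: float = 1.0        # process noise: acceleration variance (assumed)
    r: float = 0.05 ** 2  # measurement noise variance in m^2 (assumed)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: List[List[float]] = field(
        default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])

    def predict(self, dt: float) -> None:
        """Propagate state and covariance with F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + v * dt, v]
        P = self.P
        # F P F^T, written out for the 2x2 case.
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        # Add discrete white-noise-acceleration process noise Q.
        self.P = [[p00 + self.q * dt ** 4 / 4, p01 + self.q * dt ** 3 / 2],
                  [p10 + self.q * dt ** 3 / 2, p11 + self.q * dt ** 2]]

    def update(self, z: float) -> None:
        """Correct the prediction with a position measurement z (H = [1, 0])."""
        P = self.P
        y = z - self.x[0]                   # innovation
        s = P[0][0] + self.r                # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s   # Kalman gain
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


kf = ConstantVelocityKF()
dt = 0.1                     # assumed 10 Hz fused updates
for k in range(1, 51):
    measured = 1.5 * dt * k  # pedestrian walking at 1.5 m/s, noise-free here
    kf.predict(dt)
    kf.update(measured)
print(f"position {kf.x[0]:.2f} m, velocity {kf.x[1]:.2f} m/s")
# -> position 7.50 m, velocity 1.50 m/s
```

The filter never observes velocity directly, yet it recovers the walking speed from successive positions; that inferred velocity is what a planner would extrapolate when predicting where a pedestrian or cyclist will be next.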

4. Global Perception and Intelligent Decision-Making

AI-powered multi-source sensor fusion not only overcomes the limitations of single-sensor systems but also moves autonomous driving from “local perception” toward true global perception:

- Multi-scale fusion: seamless coverage from centimeter-level near-field to hundred-meter-level far-field.
- Multi-layer modeling: from geometric point clouds to semantic scenes, enabling deeper environmental understanding.
- Decision-making support: fused data feeds into the autonomous driving decision layer, allowing vehicles to perform optimized path planning and risk assessment based on global information.

Ultimately, vehicles will no longer merely 'sense the environment,' but instead actively conduct scene understanding and behavior prediction, resulting in a higher level of environmental adaptability.

5. Future Trends and Technology Roadmap

With advances in computational power and algorithm optimization, TOF + LiDAR fusion with AI is emerging as the core technological pathway for next-generation autonomous driving:

- Hardware: Integrated solutions combining lightweight TOF sensors with solid-state LiDAR will reduce cost and power consumption.
- Algorithms: Transformer-based architectures and large-scale multimodal fusion models will further improve accuracy and robustness.
- Applications: Rapid adoption in urban mobility, highways, delivery robots, and intelligent parking scenarios.

This intelligent data-processing paradigm enables vehicles to evolve from passive 'environment sensing' toward proactive 'scene understanding,' laying a solid foundation for L4 and L5 autonomous driving.

6. Industry Applications and Use Cases

As autonomous driving technology advances, TOF-LiDAR fusion has already demonstrated strong potential across multiple real-world scenarios. Their complementary strengths enhance overall perception and provide safer, more efficient solutions for complex traffic environments.

- Autonomous Vehicles: On highways, city roads, and tunnels, fusion systems leverage TOF for high-precision near-field sensing and LiDAR for long-range scanning, achieving global perception. For instance, LiDAR can identify vehicles and obstacles hundreds of meters ahead at high speeds, while TOF ensures accurate recognition of nearby hazards during lane changes or emergency maneuvers. This complementary sensing reduces collision risks and enables smoother path planning.

- Unmanned Delivery Vehicles: In logistics and last-mile delivery, robots or autonomous couriers often navigate residential areas, campuses, and pedestrian-rich zones. TOF sensors are critical for detecting close obstacles such as low-lying packages, children, or pedestrians’ legs, while LiDAR supports route planning and collision avoidance. This fusion approach enhances adaptability and safety, powering smart logistics and automated delivery.

- Complex Scenarios: In underground parking lots, congested roads, or dense urban areas, single-sensor systems often struggle to deliver reliable perception. TOF provides fine-grained near-field point cloud data for detecting pillars, walls, and low obstacles, while LiDAR offers global spatial mapping. Together, they ensure safe navigation even under poor lighting or signal interference.

These applications show that TOF-LiDAR fusion is moving beyond theoretical research into real-world deployments. Whether in autonomous cars, delivery robots, or high-complexity urban mobility, the technology is demonstrating significant value. With ongoing advances in hardware and algorithms, sensor fusion is likely to become an industry standard and drive autonomous driving toward higher levels of intelligence.

7. Future Outlook

As autonomous driving progresses, reliance on a single sensor type is no longer sufficient. The TOF + LiDAR + AI triad will form the cornerstone of fully autonomous driving, representing not just a sensor upgrade, but a systemic technological evolution.

- Sensor Complementarity with AI Empowerment: TOF sensors fill LiDAR’s blind spots in near-field perception, while LiDAR supplies long-range environmental modeling. AI serves as the “brain,” integrating and reasoning over multi-source data to create complete and continuously updated environmental models.

- Global Perception and Intelligent Decision-Making: Vehicles will transition from localized sensing to true global perception, allowing them to understand both near-field details and far-field dynamics under complex conditions. This ensures safer, more intelligent decision-making across highways, urban roads, and adverse weather.

- Vehicle-to-Everything (V2X) and Smart Transportation Ecosystems: With the rise of smart cities, autonomous vehicles will need to interact with external traffic infrastructure. TOF and LiDAR fusion, enhanced with AI and V2X, will enable vehicles to receive real-time road, traffic, and vehicle information, facilitating cooperative driving and intelligent mobility ecosystems.

Future Prospects

As hardware costs decline, AI algorithms evolve, and computational platforms improve, TOF-LiDAR fusion will likely become a standard configuration across the autonomous driving industry. Beyond vehicles, the technology will extend to smart cities, delivery services, and public transportation, accelerating the digital and intelligent transformation of mobility.

Conclusion

TOF-LiDAR fusion is not only a technological innovation but also an inevitable pathway toward the maturity of autonomous driving. Leveraging their complementary advantages, fusion perception enables true global awareness, delivering breakthroughs in obstacle detection, dynamic monitoring, and complex-scene adaptability. With deeper integration of AI and big data, TOF and LiDAR fusion will play a pivotal role in shaping the future of autonomous driving and intelligent transportation.
